Quite an interesting day has finally come to an end. AxonIQ, the company behind the Axon framework and server, hosted their annual conference in Amsterdam. Although it is a vendor-centric conference, the event also features a couple of talks from highly regarded speakers from all around the globe who share general wisdom on event-driven systems. Even if you are not that interested in the Axon platform itself, there is a lot to learn from the talks and workshops offered at the Event-driven Microservices Conference. This year marks my second visit in a row and I would like to share my two cents on the event.

Your architectural choices will shape interaction as well as culture

The opening keynote was given by Alberto Brandolini. If you happen to work on event-centric systems, you have most likely come across that name already: Alberto is an avid speaker and writer in the field of software architecture and software methodology. He invented the event storming method as an exploratory approach for defining event models that both business and engineering understand. If you are interested in the method, I suggest taking a look at his book on event storming, which can be purchased over at Leanpub. In his talk, The Gordian Knot - Hatching Software Development Ecosystems, Alberto talked about the guiding principles that form highly motivated and efficient teams of developers - and what stands in the way of achieving this. The culture of the company is a large contributing factor, whether you want to introduce a DevOps mindset or try to create a trusted environment where failures are considered an essential part of the overall journey and do not result in playing the blame game across your organisation. But culture is often treated as orthogonal to and independent of other concerns. People accept it as it is. Alberto clearly argued against that notion and gave a nice definition of the term culture and what it really is about:

Culture is the cumulative effect of a winning behavior within a given context.

It was interesting to see how he applied the Pink check (see Drive: The Surprising Truth About What Motivates Us) to a couple of case studies with respect to the key components of intrinsic motivation: autonomy, mastery, and purpose. Autonomy is driven by the need to control what you do, when you do it and how you do it. According to Pink, being autonomous is an enabler for creative solutions as there are no external constraints that limit the design space. Mastery, on the other hand, is driven by the desire to improve. Of equal importance is purpose: people may become demotivated at work if they do not grasp the bigger picture. Alberto discussed these components across many case studies, ranging from projects at workplaces with strict hierarchies and rule sets that developers need to comply with to, let us say, somewhat more relaxed environments. He argues that reducing constraints is a key factor that contributes to higher autonomy, and that establishing bounded contexts is a means to do so. Extending this line of argument, event storming is not only a formidable tool to explore your business domain and get an understanding of your bounded contexts, but it also makes the purpose of the software for a company directly visible. Always bear in mind, however, that the organisation is not orthogonal to the code. Your architectural choices will shape interaction as well as culture.

What happens after the honeymoon phase is over?

Another talk that stood out in my opinion was given by Joris Kuipers: Day 2 problems in CQRS and Event Sourcing. Joris is a long-time user of the Axon platform and shared his insights on the challenges that event-sourced systems built with Axon face after going into production. These challenges are not specific to Axon-powered systems, but apply to event-driven architectures in general. After going into production, the business may change its mind and the software needs to be re-aligned with business goals, which potentially requires remodelling your aggregate design. What used to be a Liquibase migration script in a traditional system is now a handmade tool firing custom commands to facilitate the update.

But what can actually change? New events may be introduced to the system. The problem is that an old version of your application (or an external event processor that you are not aware of) does not recognize the new events and is thus unable to handle them properly. Joris gave a nice quote that is inspired by Postel's Law:

Be conservative in what you publish publicly, be liberal in what you accept from your past and future self (Kuipers Events Corollary).

This means ignoring what you do not know rather than throwing exceptions for unknown event types or unexpected fields. This, however, requires forwards compatibility from old versions of your application. In my personal experience, this aspect of software design is often neglected, so I cannot stress this enough: even though it is hard to get right and requires a significant amount of testing, you should invest the time to design for forwards compatibility. Joris also recommended tagging event types and keeping a stable set of base fields for your events, so that event processors can still work on them even in case of a version mismatch. Of course, this works very well in a situation where events are used as a signaling mechanism to another service. With the signaling use case in mind, Joris advocates the notify-and-fetch pattern as a robust design principle. The notify-and-fetch pattern basically states that you signal a change in your domain to other services, and if these services need to act on the altered data, they send an inquiry back to the service that emitted the event in the first place to get the details of the state change. Although I do see the point of his argument, I am not sure that I fully agree. Notify-and-fetch is in my opinion only a viable solution if the design would otherwise get more convoluted. Let me show you an example (adapted from Allard Buijze's talk).

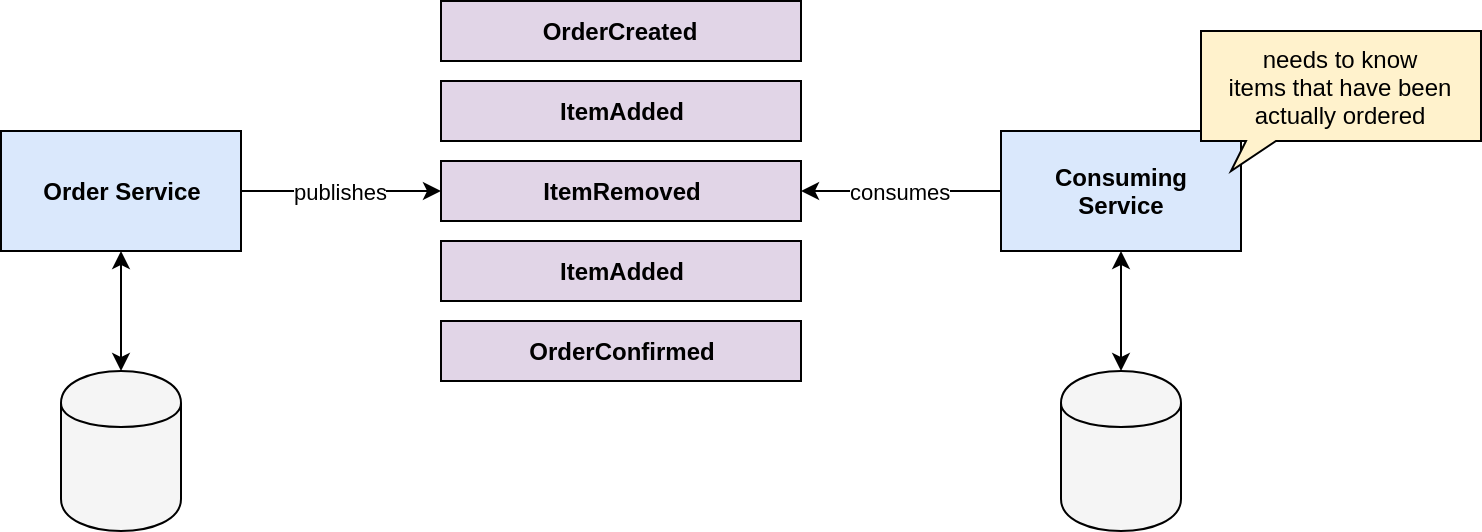

The order service illustrated in the diagram above publishes a holistic set of events centered around orders. On the consuming side, we have a system that implements some business logic for ordered items. The consuming service has to consume all of the ItemAdded, ItemRemoved and OrderConfirmed events to re-establish the respective state and only process items that have actually been ordered. This logic is most likely already implemented in the order service. Instead of redundantly implementing business logic, we can use the notify-and-fetch pattern. This is in my opinion a perfect use case for it, since it helps us to sharpen the collaboration between both services in terms of exchanged events. Consider the refined solution underneath.

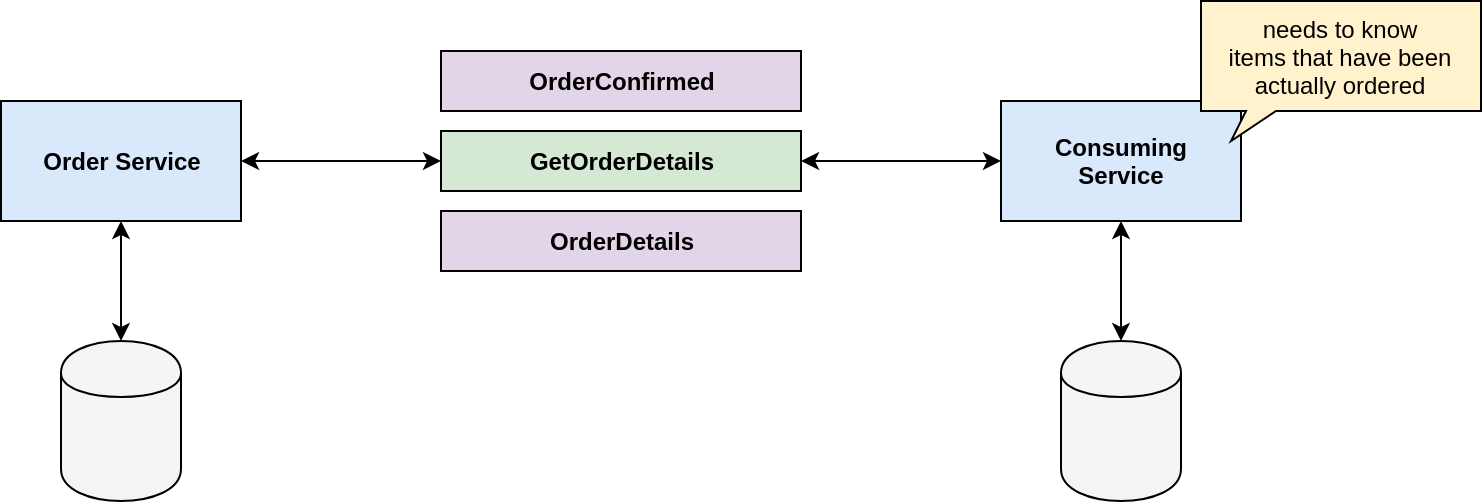

The order service may still use all of the aforementioned events for its internal event-sourced design, but it publishes more coarse-grained events to other services. In this case, it simply publishes an OrderConfirmed event that might carry nothing more than an order ID. The consuming service emits a GetOrderDetails event, which in turn prompts the order service to publish the details of the requested order. Please note that GetOrderDetails and OrderDetails do not have to be modelled as events. This could be done using a synchronous operation invoked via an HTTP API as well.
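To make the refined design a bit more tangible, here is a minimal in-memory sketch of it in plain Java. It is not taken from the talk and does not use the Axon API: the class names OrderService and FulfilmentService, the subscribe method and the direct method call that stands in for GetOrderDetails are all assumptions made for illustration. In a real system the notification would travel over an event bus and the fetch would be a query or an HTTP call.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

/** Hypothetical notify-and-fetch sketch; all names are illustrative. */
public class NotifyAndFetchSketch {

    /** Coarse-grained public event: carries only the order ID. */
    static final class OrderConfirmed {
        final String orderId;
        OrderConfirmed(String orderId) { this.orderId = orderId; }
    }

    /** Answer to a GetOrderDetails request. */
    static final class OrderDetails {
        final String orderId;
        final List<String> items;
        OrderDetails(String orderId, List<String> items) {
            this.orderId = orderId;
            this.items = items;
        }
    }

    /** Owns the order state; item-level changes stay internal. */
    static final class OrderService {
        private final Map<String, List<String>> itemsByOrder = new HashMap<>();
        private final List<Consumer<OrderConfirmed>> subscribers = new ArrayList<>();

        void subscribe(Consumer<OrderConfirmed> subscriber) { subscribers.add(subscriber); }

        void addItem(String orderId, String item) {
            itemsByOrder.computeIfAbsent(orderId, id -> new ArrayList<>()).add(item);
        }

        void removeItem(String orderId, String item) {
            itemsByOrder.getOrDefault(orderId, new ArrayList<>()).remove(item);
        }

        /** Notify: only the confirmation is published to other services. */
        void confirm(String orderId) {
            OrderConfirmed event = new OrderConfirmed(orderId);
            subscribers.forEach(s -> s.accept(event));
        }

        /** Fetch: stands in for GetOrderDetails (could be an HTTP endpoint). */
        OrderDetails getOrderDetails(String orderId) {
            List<String> items = itemsByOrder.getOrDefault(orderId, new ArrayList<>());
            return new OrderDetails(orderId, new ArrayList<>(items));
        }
    }

    /** Consumer that no longer rebuilds order state from item events. */
    static final class FulfilmentService {
        private final OrderService orders;
        final List<String> itemsToShip = new ArrayList<>();

        FulfilmentService(OrderService orders) {
            this.orders = orders;
            orders.subscribe(this::on);
        }

        void on(OrderConfirmed event) {
            // React to the notification by asking the owner for the details.
            itemsToShip.addAll(orders.getOrderDetails(event.orderId).items);
        }
    }

    public static void main(String[] args) {
        OrderService orders = new OrderService();
        FulfilmentService fulfilment = new FulfilmentService(orders);
        orders.addItem("order-1", "book");
        orders.addItem("order-1", "pen");
        orders.removeItem("order-1", "pen");
        orders.confirm("order-1");
        System.out.println(fulfilment.itemsToShip);
    }
}
```

The consuming side never sees ItemAdded or ItemRemoved here. It only learns that an order was confirmed and then asks for the current details, so the rules about which items count as ordered stay in one place.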

Joris also talked about expanding existing events or aggregates and adding new query projections. I will not go into the details at this point. The gist is that you need to carefully consider how your event-sourced application will evolve. Plan for the added complexity, especially with respect to your deployment strategy, as zero-downtime deployments are very hard, if not close to impossible, once you commit to this architectural style.
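Coming back to the advice on ignoring unknown event types and unexpected fields, the idea can be sketched in a few lines of plain Java. This is my own illustration, not code from the talk and not the Axon API: the TolerantEventProcessor class, the map-based event representation and the "type" base field are assumptions. The processor dispatches on the type tag, lets handlers read only the fields they know, and skips anything it has no handler for instead of throwing.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

/** Hypothetical tolerant event processor; not part of the Axon API. */
public class TolerantEventProcessor {

    // Handlers keyed by the event type tag.
    private final Map<String, Consumer<Map<String, Object>>> handlers = new HashMap<>();
    // Types we saw but could not handle, kept for logging or metrics.
    private final List<String> skippedTypes = new ArrayList<>();

    public void on(String eventType, Consumer<Map<String, Object>> handler) {
        handlers.put(eventType, handler);
    }

    /** Returns true if the event was handled, false if it was ignored. */
    public boolean process(Map<String, Object> event) {
        // "type" is an assumed base field that every event carries.
        String type = String.valueOf(event.get("type"));
        Consumer<Map<String, Object>> handler = handlers.get(type);
        if (handler == null) {
            skippedTypes.add(type); // remember it, but do not throw
            return false;
        }
        handler.accept(event);
        return true;
    }

    public List<String> skippedTypes() {
        return skippedTypes;
    }

    public static void main(String[] args) {
        List<String> confirmed = new ArrayList<>();
        TolerantEventProcessor processor = new TolerantEventProcessor();
        // The handler reads only the field it knows about.
        processor.on("OrderConfirmed", e -> confirmed.add((String) e.get("orderId")));

        Map<String, Object> known = new HashMap<>();
        known.put("type", "OrderConfirmed");
        known.put("orderId", "order-1");
        known.put("loyaltyPoints", 42); // field added by a newer version

        Map<String, Object> unknown = new HashMap<>();
        unknown.put("type", "OrderGiftWrapped"); // type this version has never seen

        System.out.println(processor.process(known));
        System.out.println(processor.process(unknown));
        System.out.println(confirmed + " " + processor.skippedTypes());
    }
}
```

Recording the skipped types rather than dropping them silently is a deliberate choice in this sketch: an old version stays running, but you can still see that it is missing events it might need to care about.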

Monolithic vs. microservices

The remaining talks were also quite interesting. The closing talk by Allard Buijze touched on a topic close to my heart. It centered around the question: What makes you think you can build a thriving microservice architecture if you cannot build a decent monolith first? I completely stand behind this. Build a modular monolith instead and see how far this approach actually takes you. Most of the time, you will be just fine. And if not: modularization along the axis of your business requirements is a necessary prerequisite before you are able to break apart any monolith. Already having that in place, by being disciplined about the bounded contexts of your domain, saves you a lot of hassle when migrating piece by piece to a microservice architecture.

Whether your approach is monolithic or applies microservices, the Axon platform does a lot of the heavy lifting for you either way and comes with a nice programming model that integrates well with Spring-based applications. Be sure to give it a try.